Optimize Product Development with Teamcenter

Make your manufacturing and Engineering-to-Order projects smoother with Siemens Teamcenter. Teamcenter helps you work better together, cut costs, and scale as your needs change.

The CLEVR way: From vision to value

At CLEVR, we don’t just implement technology—we enable transformation. Our approach ensures that companies don’t just digitize but truly evolve by embedding Low Code, PLM, and MOM solutions in a structured, scalable way.

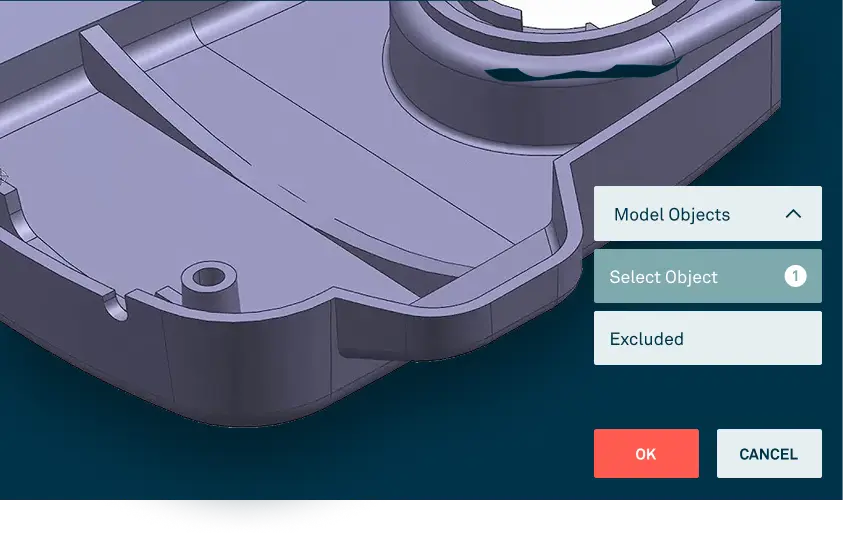

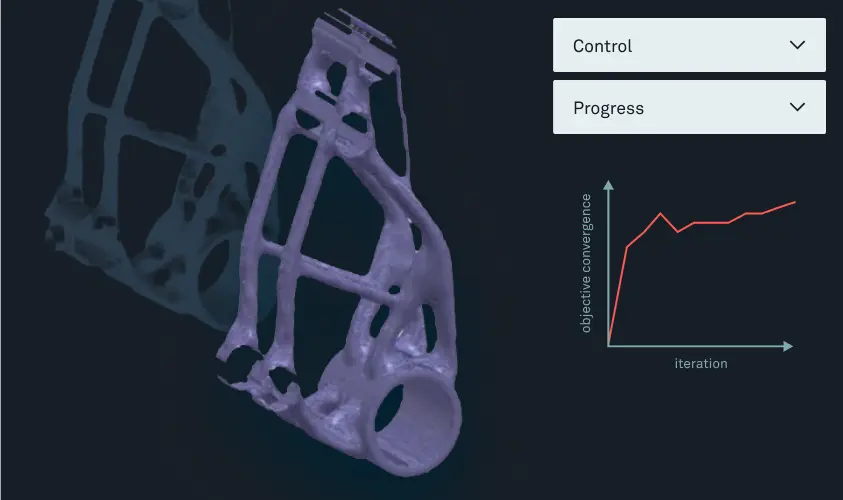

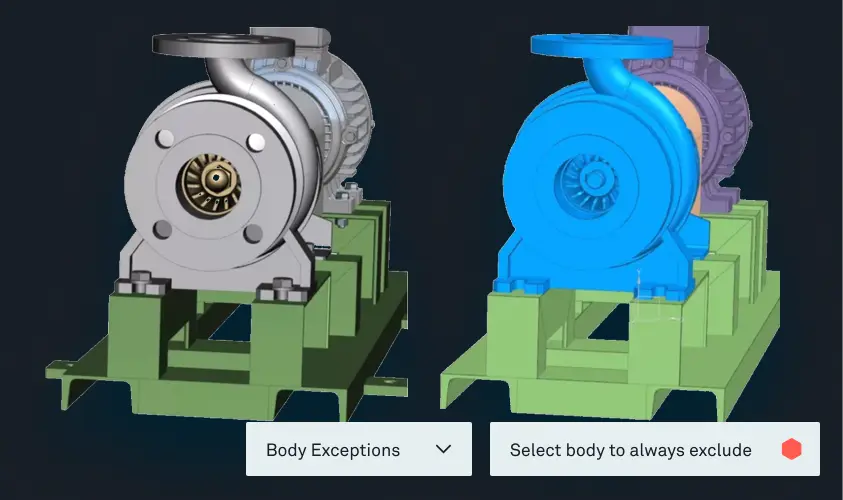

Key NX Features

Integrated Design, Simulation, and Manufacturing

Combine all aspects of product development into a single environment, reducing design iterations and accelerating time-to-market.

Why CLEVR?

- Proven Expertise: 20 years of low-code experience, 3,500+ applications delivered.

- Tailored Solutions: A unique "Vision to Value" methodology ensuring measurable results.

- Global Recognition: Mendix Platinum Partner, awarded Best BNL Partner 2024.

- Customer Satisfaction: Score of 8.8 out of 10, reflecting our commitment to excellence.

- Certified Professionals: The largest team of Mendix expert developers and MVPs.

Compare licensing plans

Advanced

Create and edit designs of typical 3D parts and assemblies and more with NX X Design Standard.

Standard

Create and edit designs of typical 3D parts and assemblies and more with NX X Design Standard.

Premium

Create and edit designs of typical 3D parts and assemblies and more with NX X Design Standard.

Customer stories

See how companies like yours are transforming with CLEVR.

CLEVR suggested some new ways we could use Teamcenter that we hadn't seen before

Mendix allows us to rapidly adapt to new legal demands and security updates.

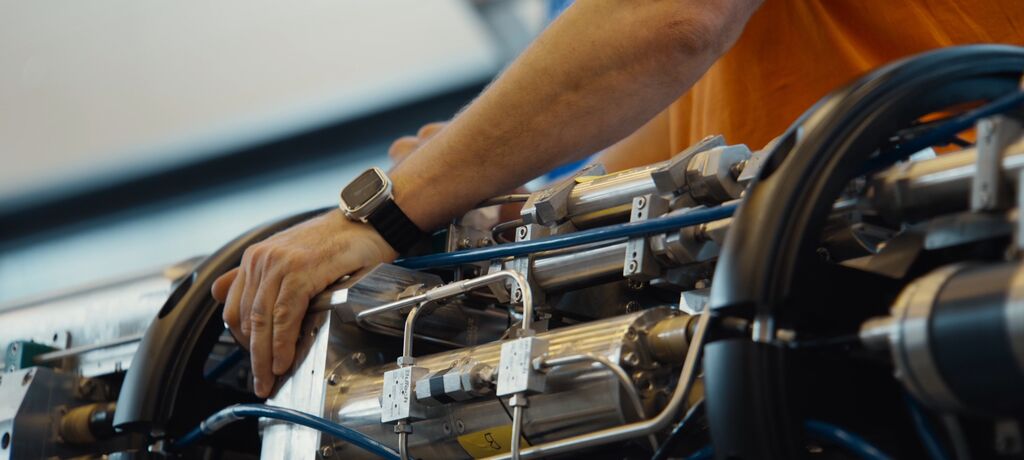

I think we build tomorrow together in different ways. We try to build the future by providing equipment to produce green hydrogen to enable the green transition, and CLEVR with the information technology will help us to do that efficiently

Find out how CLEVR can drive impact for your business

Related Resources

Mendix versus PowerApps: which low-code platform wins?

Low-code development has reached a tipping point for enterprises. With your competitors embracing the benefits of rapid development, the question is no longer whether your business should use low-code tools, but which one will form the foundation of your future.

Mendix and Microsoft PowerApps are among the top choices, and both promise faster building with less traditional coding.

But which one better fits your needs?

This comparison guide examines each platform's strengths, costs, and the considerations you need to weigh to help you choose the right foundation.

Short on time? Here is a quick overview

- Platform positioning: Mendix is a top-tier platform for complex applications. PowerApps is a lighter, developer-friendly solution within the Microsoft ecosystem.

- Cost dynamics: PowerApps often appears "free" with Microsoft 365 licenses, but premium connectors and Dataverse can bring significant hidden costs. Mendix offers clear but higher upfront pricing.

- Use-case fit: Choose PowerApps for simple workflows within Microsoft environments. Choose Mendix for complex, integrated, multi-device applications that require enterprise-wide governance.

- Decision framework: The platform choice depends on app complexity, system alignment, governance needs, and total cost beyond initial licensing.

Platform overviews

Mendix: enterprise-grade development platform

Mendix works as a unified, model-driven platform designed for the complete software development process. Its focus is visual app modeling, in which the model itself becomes the app, so little to no code needs to be written.

The platform supports building responsive web applications, progressive web apps, and mobile applications from a single model. Professional developers can extend functionality with custom Java actions and React-based widgets while working primarily in the visual modeling environment.

The platform targets organizations building enterprise applications that require extensive system integrations, complex business logic, and multi-year lifecycle management.

PowerApps (Microsoft Power Platform): a tool suited to citizen developers

Microsoft PowerApps is part of the broader Power Platform suite. It focuses on accessibility for business users and citizen developers.

For many businesses, the main advantage of PowerApps is its tight integration with the Microsoft ecosystem. Organizations already using Microsoft 365, SharePoint, Teams, and Dynamics 365 can leverage existing data sources and user authentication without additional setup investment.

The platform's low-code approach uses Power Fx, an Excel-like formula language. This makes it accessible to users familiar with Microsoft's productivity tools. However, this simplicity can become limiting as app requirements grow more complex, and advanced scenarios often require technical expertise and help from professional developers.

Usability and developer experience

Mendix

Mendix offers a dual-IDE (integrated development environment) approach aimed at different user types.

Mendix Studio gives business users a simple web-based environment to build models and collaborate on requirements. Meanwhile, the professional development experience centers on Mendix Studio Pro, a full desktop IDE where complex applications are built. It includes built-in visual modeling for domain models, business logic (microflows), and user interfaces in a single environment.

There is also MXAssist, Mendix's AI-powered development assistant, which offers real-time suggestions and automates routine development tasks. The platform includes built-in debugging tools, automated testing frameworks, and Git-based version control.

The learning curve is steeper than with PowerApps. However, this complexity makes it possible to build enterprise applications that scale to thousands of users and complex integration requirements.

PowerApps

PowerApps puts ease of use first, thanks to familiar Microsoft design patterns. Canvas apps use drag-and-drop interfaces similar to PowerPoint, while model-driven apps automatically generate interfaces based on data structures in Microsoft Dataverse.

Development happens mainly in the web-based PowerApps Studio, but building complete solutions often requires working across multiple interfaces: PowerApps for the user interface, Power Automate for business logic, and the Power Platform Admin Center for governance.

Use-case fit and application complexity

When Mendix excels

Mendix is best suited to applications of medium to high complexity that require extensive system integrations, sophisticated business logic, and multichannel deployment. The platform's model-driven design handles complex data relationships, detailed workflows, and performance-sensitive scenarios well.

It is also well suited to customer portals that connect to SAP systems, mobile field service applications with offline capabilities, and case management systems that span multiple departments. In addition, the platform's strength in native mobile development, which uses React Native, delivers better performance than hybrid alternatives.

Manufacturers such as Nel Hydrogen have also used Mendix alongside product lifecycle management (PLM) systems to modernize their product development processes.

When PowerApps excels

PowerApps excels in simpler scenarios where rapid deployment and seamless integration with the Microsoft ecosystem take precedence over application sophistication. Form digitization, approval workflows, and data-collection apps are ideal PowerApps use cases.

The platform's strength lies in making the most of existing Microsoft investments. Applications that mainly consume SharePoint data, integrate with Teams for collaboration, or extend Dynamics 365 functionality can be deployed quickly with minimal custom development.

Organizations with an established Microsoft 365 user base often default to PowerApps for departmental applications, taking advantage of included licenses and familiar user interfaces.

Integration and ecosystem

Mendix

Mendix is platform-agnostic and designed for heterogeneous enterprise environments. The platform provides strong out-of-the-box connectivity to major enterprise systems, notably SAP, Oracle, and Salesforce, through standard protocols such as REST, SOAP, and OData.

The Mendix Marketplace also offers thousands of prebuilt connectors and modules, including a wide range of Amazon Web Services (AWS) and AI service connectors.

For unique needs, the Mendix Connector Kit lets professional developers build custom connectors in Java.
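To make "standard protocols" concrete: an OData client typically queries an entity set over HTTP by appending system query options such as `$select`, `$filter`, and `$top` to the URL. The sketch below builds such a URL with Python's standard library only. It makes no claim about how Mendix connectors work internally, and the helper `build_odata_query`, the service root, and the entity and field names are all hypothetical.

```python
from urllib.parse import quote, urlencode


def build_odata_query(service_root, entity_set, *, select=None, filter_expr=None, top=None):
    """Build an OData query URL from standard system query options (hypothetical helper)."""
    options = {}
    if select:
        options["$select"] = ",".join(select)  # fields to return
    if filter_expr:
        options["$filter"] = filter_expr  # server-side filter expression
    if top is not None:
        options["$top"] = str(top)  # maximum number of records
    url = f"{service_root.rstrip('/')}/{entity_set}"
    if options:
        # Percent-encode spaces and quotes, but keep "$" and "," readable.
        url += "?" + urlencode(options, quote_via=quote, safe="$,")
    return url


# Hypothetical service: first 10 open orders, two fields only.
print(build_odata_query(
    "https://example.com/odata/sales",
    "Orders",
    select=["OrderID", "Status"],
    filter_expr="Status eq 'Open'",
    top=10,
))
# → https://example.com/odata/sales/Orders?$select=OrderID,Status&$filter=Status%20eq%20%27Open%27&$top=10
```

Because the query options are part of the protocol rather than a vendor API, the same request shape works against any OData-compliant service.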

PowerApps

The defining characteristic of PowerApps is its tight integration with SharePoint, Teams, Dataverse, and Azure services. This native connectivity enables rapid development of applications that feel like a natural extension of the Microsoft productivity suite.

Most Microsoft 365 licenses include standard connectors for Microsoft services. The platform handles sign-in, data sharing, and collaboration workflows without additional setup.

But this strength raises vendor lock-in concerns. Although PowerApps supports connections to non-Microsoft systems through premium connectors, these connections often lack the depth and performance tuning available for Microsoft's own tools.

Scalability, governance, and CI/CD

Mendix

Mendix builds in enterprise governance as a core design principle. The platform's Git-based version control enables professional development practices, including branching, merging, and team development workflows.

In addition, the built-in application lifecycle management (ALM) environment includes Agile project management tools, automated testing frameworks, and CI/CD (continuous integration and continuous delivery/deployment) pipelines.

Mendix Pipelines also provides built-in deployment automation, and the platform supports integration with external DevOps tools such as Jenkins and GitLab.

Finally, organizations can deploy Mendix applications to public clouds, private clouds, or on-premises infrastructure. This gives them full control over data residency and performance tuning.

PowerApps

PowerApps governance works through the Power Platform Admin Center and data loss prevention (DLP) policies.

The Center of Excellence (CoE) Starter Kit provides governance capabilities through apps built with PowerApps, which track adoption and manage the application portfolio. However, it requires significant setup and customization effort compared with the built-in governance tools of Mendix.

In PowerApps, application lifecycle management relies on solutions and external CI/CD tools such as Azure DevOps. While powerful when set up correctly, this approach requires cross-platform knowledge and ongoing management of multiple toolchains.

Cost and licensing models

Mendix

Mendix uses subscription-based pricing starting at about $998 per month for a single application, plus $15 per user per month.

Although some cite the higher initial cost as a drawback, the Mendix pricing model offers a predictable total cost of ownership without usage-based surprises.

It also reflects Mendix's positioning for professional development teams building complex applications. Organizations building multiple applications can benefit from unlimited-application plans starting at $2,495 per month, plus €15 per user per month, which makes the cost per application more competitive at scale.

In addition, enterprise customers can negotiate custom pricing for large deployments. Costs vary with app complexity, the number of users, and the business requirements of the deployment.
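The single-application pricing quoted above is a fixed platform fee plus a per-user fee, so the monthly cost grows linearly with the number of users. A minimal sketch, assuming the list prices cited in this article and ignoring negotiated discounts; `mendix_monthly_cost` is a hypothetical helper, not part of any Mendix tooling.

```python
def mendix_monthly_cost(users, base_fee=998.0, per_user_fee=15.0):
    """Estimate a monthly subscription: fixed platform fee plus a fee per user.

    Defaults are the single-application list prices quoted in this article;
    negotiated enterprise pricing will differ.
    """
    if users < 0:
        raise ValueError("users must be non-negative")
    return base_fee + per_user_fee * users


for n in (50, 100, 500):
    print(n, "users:", mendix_monthly_cost(n))
```

For example, 100 users gives $998 + 100 × $15 = $2,498 per month under these assumptions. Because there is no usage-based component, the estimate depends only on the user count, which is what makes this model predictable.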

PowerApps

PowerApps uses a tiered licensing model that appears accessible but can bring significant hidden costs as app complexity grows.

Most Microsoft 365 licenses include limited PowerApps capabilities, restricted to standard connectors and SharePoint data sources. Using a premium connector triggers per-user licensing for every user of the app, however minimal the premium usage. Applications that need SQL Server connectivity, custom connectors, or Dataverse immediately require a license of $5 to $20 per user per month.

Costs rise further with Dataverse capacity ($40 per GB per month for database capacity), API request overages ($50 per month for 50,000 additional requests), and specialized features such as Power Pages for external portals. These usage-based add-ons can multiply initial cost estimates.
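To see how these add-ons stack up, the sketch below sums per-user licensing, extra Dataverse database capacity, and API overage packs using the figures quoted above. Assumptions, all hypothetical: the per-user rate is an input within the quoted $5 to $20 range, `extra_db_gb` counts only capacity beyond any included entitlement, and overages are billed per started pack of 50,000 requests. The function name is illustrative only.

```python
import math


def powerapps_monthly_cost(users, per_user_license, *, extra_db_gb=0.0, extra_api_requests=0,
                           db_price_per_gb=40.0, api_pack_price=50.0, api_pack_size=50_000):
    """Break an estimated monthly PowerApps bill into licensing and usage add-ons.

    Assumes overage is billed per started pack of `api_pack_size` requests and
    that `extra_db_gb` is capacity beyond any included entitlement.
    """
    if min(users, per_user_license, extra_db_gb, extra_api_requests) < 0:
        raise ValueError("inputs must be non-negative")
    licenses = users * per_user_license
    dataverse = extra_db_gb * db_price_per_gb
    api_overage = math.ceil(extra_api_requests / api_pack_size) * api_pack_price
    return {
        "licenses": licenses,
        "dataverse": dataverse,
        "api_overage": api_overage,
        "total": licenses + dataverse + api_overage,
    }


print(powerapps_monthly_cost(100, 20.0, extra_db_gb=5, extra_api_requests=120_000))
```

With 100 users at $20, 5 GB of extra capacity, and 120,000 extra requests, the estimate is $2,000 + $200 + 3 × $50 = $2,350 per month. Returning a breakdown rather than a single number makes it easy to see which usage-based component is driving growth.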

Community and support ecosystem

Mendix

The Mendix ecosystem centers on its curated Marketplace of thousands of prebuilt components, connectors, and application modules. The Marketplace enables reuse and accelerates development through shared community contributions.

In addition, the Mendix Academy offers free training materials and certification paths for developers of different skill levels. The platform's AI-assisted development tooling (MXAssist) also helps developers follow best practices and speed up everyday development tasks.

Mendix Pro support includes dedicated customer success teams and technical account management for enterprise customers. This supports successful platform adoption and ongoing alignment.

PowerApps

PowerApps benefits from Microsoft's vast ecosystem, with 56 million monthly active users across the Power Platform. This scale produces extensive community resources, training materials, and third-party consulting expertise.

Microsoft's support model varies considerably by licensing option. Basic support is included, but premium support requires additional investment.

Gartner & Forrester assessments

Gartner analysis

Both platforms hold Leader positions in Gartner's Magic Quadrant for Enterprise Low-Code Application Platforms.

In 2024, Mendix was positioned highest for "Ability to Execute" for the second consecutive year, reflecting strong customer success and platform maturity.

Gartner Peer Insights shows similar user satisfaction: as of June 2025, Mendix holds 4.5 stars from 297 reviews, while PowerApps holds 4.6 stars from 342 reviews.

Forrester analysis

Microsoft is named a Leader in Forrester's 2025 Wave for low-code platforms for professional developers, with top scores for strategy and current offering. Forrester recognizes Microsoft's AI-driven vision and enterprise-scale capabilities.

Mendix is rated a Strong Performer in the same analysis. It is particularly strong in data modeling, project management integrations, and version control capabilities for professional development teams.

Which platform is best for you?

Choose PowerApps if:

- Your organization is deeply standardized on Microsoft 365, with SharePoint, Teams, and Dynamics 365 as its primary productivity tools.

- Your main use cases are simple workflows, form digitization, and approval processes.

- Development speed takes precedence over application power, and you can accept platform limits in exchange for faster deployment.

Choose Mendix if:

- Your needs include complex, integrated, multi-device applications with sophisticated business logic and extensive system integrations.

- Long-term application lifecycle management matters more than a fast initial deployment.

- Your integration needs span systems from multiple vendors, particularly SAP, Oracle, or other non-Microsoft enterprise platforms.

When might you use both?

Many large enterprises use both platforms strategically. PowerApps can serve departmental productivity needs and citizen-developer initiatives, while Mendix handles core-system modernization and complex customer-facing applications.

Note that this hybrid approach requires clear governance boundaries and cross-platform integration strategies to prevent data silos and preserve architectural coherence.

How CLEVR can support your platform implementation

In summary, PowerApps can maximize existing Microsoft investments and accelerate small-scale development for limited use cases. Mendix, meanwhile, delivers enterprise-grade capabilities for complex, mission-critical applications that require robust governance.

Your app complexity, integration needs, and long-term maintenance responsibilities determine the best option. Evaluate these and other low-code platforms on total cost of ownership, governance needs, and strategic alignment rather than on initial license costs alone.

To support this evaluation, and ultimately your platform implementation, CLEVR offers platform-neutral guidance backed by deep Mendix expertise. CLEVR focuses on understanding your business requirements rather than promoting specific technologies, so that whichever solution you choose integrates seamlessly with your current systems.

Want to learn more? Contact CLEVR today.

Research methodology

This analysis combines data from Gartner Magic Quadrant reports, Forrester Wave assessments, verified user reviews from Gartner Peer Insights, and comprehensive platform documentation. The cost analysis is based on publicly available pricing as of June 2025, supplemented by actual customer deployments across various industries.

Misaligned workflows: the real barrier to smart factories

Robotics, digital twins, advanced automation, and emerging technologies such as generative AI are attracting enormous investment in manufacturing. Organizations are increasingly building connected ecosystems of data, platforms, and cyber-physical systems, aiming for seamless interoperability and end-to-end visibility.

But for many manufacturers, these initiatives struggle to move beyond pilots, stall during enterprise rollout, or result in standardized technology stacks that lack the flexibility to adapt to the unique workflows of each plant and business. Recent Deloitte research confirms this paradox, naming operational risk, talent and skills gaps, and the alignment of IT and OT priorities among the main obstacles.

But if the technology works, why doesn't the smart factory?

Smart manufacturing requires more than standardization

Industry case studies consistently show that smart factories are both feasible and capable of delivering measurable improvements in efficiency, quality, and capacity. The digital backbone reliably manages engineering intent, planning, costing, and execution control. The execution layer provides real-time operational insight from machines and shop-floor systems. And emerging technologies such as digital twins, IoT platforms, and AI further improve performance through advanced analytics, simulation, and predictive intelligence.

However, organizations evolve at different speeds, shaped by varying levels of digital maturity, technical capability, and readiness for transformation. The breakdown rarely occurs within individual systems. It emerges between them, where workflows must connect engineering, planning, execution, and optimization into a coherent, end-to-end operating model.

Streamlined platforms, while essential, are not designed to accommodate the full diversity of workflows, product variants, and governance structures across plants and business units. That is what makes smart manufacturing more than a technology adoption problem.

Where manufacturing process optimization breaks down

When workflows are not fully aligned, symptoms appear across PLM, ERP, MES/MOM, and the shop floor, creating operational friction, slowing decision-making, and undermining the consistency of day-to-day execution.

1. Misalignment between engineering and production

In manufacturing environments, engineering updates a design, variant configuration, or bill of materials in PLM, but the change is not automatically reflected in MES work instructions or on the shop floor. Operators keep building to outdated specifications, while ERP planning still references earlier component routings. The result is rework, quality deviations, and delayed deliveries, not because systems failed, but because the digital thread between PLM, ERP, and MES is incomplete.

2. Gaps between planning and execution

ERP releases production orders based on expected capacity and inventory assumptions, but real-time constraints (such as machine availability, tool wear, or labor allocation) are visible only in MES or on the shop floor. Without a synchronized workflow between ERP and MES/MOM, planners work with outdated data while production teams manage exceptions manually.

3. Shop-floor visibility without enterprise integration

Sensors and machine data provide rich operational insight, but deviations captured on the shop floor do not always trigger structured workflows in ERP, quality management, or service systems. Maintenance teams may see alerts, while spare-parts planning, cost tracking, and customer communication remain disconnected.

4. Service feedback does not close the loop

Especially for machine builders, insights from installed machines (such as performance data, recurring faults, and configuration issues) are not systematically fed back to engineering in PLM. As a result, product improvements depend on informal communication rather than traceable, data-driven workflows across the lifecycle.

5. IT/OT governance misalignment across systems

IT teams standardize architectures for PLM, ERP, and enterprise systems, while OT teams prioritize uptime and local production stability in MES and shop-floor environments. Without clearly defined cross-system workflows, integrations stall, exceptions bypass governance, and digital initiatives lose credibility.

Orchestrating manufacturing workflows with low-code: connecting PLM, ERP, MES/MOM, and the shop floor

Positioned on top of existing PLM, ERP, MES/MOM, and shop-floor systems, low-code enables manufacturers to connect their digital backbone, execution layer, and optimization technologies into one coordinated operating model.

By acting as the connective tissue between systems, low-code turns technical interoperability into operational interoperability, enabling:

1. Real-time decision triggering across PLM, ERP, and MES

Engineering changes in PLM can automatically update ERP planning parameters and MES work instructions, enabling synchronized execution instead of manual reconciliation and delayed corrections.
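One common way to implement this is publish/subscribe: a released engineering change is published once and fanned out to handlers that update each downstream system. A minimal sketch under that assumption; the `ChangeOrchestrator` class, the handler functions, and the in-memory dictionaries standing in for ERP routings and MES work instructions are all hypothetical. In practice each handler would call the target system's API, and a production setup would also need retries and error handling.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class EngineeringChange:
    """A change released in PLM (fields are illustrative)."""
    part_number: str
    revision: str


@dataclass
class ChangeOrchestrator:
    """Publishes each PLM change once and fans it out to every subscriber."""
    handlers: list = field(default_factory=list)
    log: list = field(default_factory=list)

    def subscribe(self, handler: Callable[[EngineeringChange], None]) -> None:
        self.handlers.append(handler)

    def publish(self, change: EngineeringChange) -> None:
        for handler in self.handlers:
            handler(change)  # a real handler would call the ERP or MES API here
            self.log.append(f"{handler.__name__}: {change.part_number} rev {change.revision}")


# In-memory stand-ins for downstream systems (hypothetical).
erp_routings = {}
mes_work_instructions = {}


def update_erp_planning(change):
    erp_routings[change.part_number] = change.revision


def update_mes_instructions(change):
    mes_work_instructions[change.part_number] = change.revision


orchestrator = ChangeOrchestrator()
orchestrator.subscribe(update_erp_planning)
orchestrator.subscribe(update_mes_instructions)
orchestrator.publish(EngineeringChange("PUMP-100", "C"))
print(orchestrator.log)
```

The design choice here is that PLM does not need to know which systems consume a change: adding a new consumer means subscribing another handler, not modifying the publisher.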

2. Closed-loop production and service feedback

Machine data, quality deviations, and field performance insights can trigger structured workflows back into ERP and PLM, creating a continuous improvement cycle instead of isolated reports.
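One simple closed-loop rule, sketched under assumptions: count field fault reports per product and fault code, and flag any combination that recurs at least a threshold number of times as a candidate improvement request for engineering in PLM. The `FieldEvent` record, the threshold of 3, and the function name are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldEvent:
    """A fault reported by an installed machine (fields are illustrative)."""
    machine_id: str
    product: str
    fault_code: str


def improvement_candidates(events, threshold=3):
    """Return (product, fault_code, count) for faults seen at least `threshold` times."""
    counts = Counter((e.product, e.fault_code) for e in events)
    return sorted(
        (product, code, n) for (product, code), n in counts.items() if n >= threshold
    )


events = [
    FieldEvent("M1", "PUMP-100", "E42"),
    FieldEvent("M2", "PUMP-100", "E42"),
    FieldEvent("M3", "PUMP-100", "E42"),
    FieldEvent("M1", "PUMP-100", "E07"),
    FieldEvent("M4", "MIXER-20", "E11"),
]
flagged = improvement_candidates(events)
print(flagged)  # each entry would open a change request in PLM
```

Counting across machines, rather than reacting to each alert, is what separates a recurring design issue from a one-off failure that belongs in a maintenance workflow.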

3. Operational dashboards tailored to roles and plants

With low-code, plant managers, planners, and service teams get unified, role-specific dashboards that combine ERP, MES, and shop-floor data, supporting faster, data-driven decisions in daily operations.

4. Exception-based workflow automation

Instead of relying on emails or manual escalations, deviations in production performance, inventory, or machine behavior automatically trigger traceable workflows across systems, reducing response times and execution risk.
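A minimal sketch of exception routing with a built-in audit trail: each deviation opens a ticket, is assigned through a routing table, and records every state change so the response stays traceable. The routing table, team names, severity levels, and `WorkflowTicket` class are hypothetical; a real implementation would persist tickets and integrate with the systems involved.

```python
from dataclasses import dataclass, field
from itertools import count

# Hypothetical routing table: deviation kind -> owning team.
ROUTES = {
    "production": "production-planning",
    "inventory": "materials",
    "machine": "maintenance",
}
_ticket_ids = count(1)


@dataclass
class WorkflowTicket:
    ticket_id: int
    kind: str
    detail: str
    assignee: str
    history: list = field(default_factory=list)  # audit trail of state changes

    def transition(self, state):
        self.history.append(state)


def open_ticket(kind, detail, severity="normal"):
    """Turn a detected deviation into a routed, traceable workflow ticket."""
    assignee = ROUTES.get(kind, "operations-desk")  # fallback owner for unknown kinds
    ticket = WorkflowTicket(next(_ticket_ids), kind, detail, assignee)
    ticket.transition("opened")
    ticket.transition(f"assigned:{assignee}")
    if severity == "high":
        ticket.transition("escalated")  # e.g. notify the shift lead immediately
    return ticket


ticket = open_ticket("machine", "Spindle vibration above limit", severity="high")
print(ticket.ticket_id, ticket.assignee, ticket.history)
```

Keeping the routing rules in data rather than code means a plant can adjust ownership without redeploying the workflow logic.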

5. Variant and configuration management aligned with execution

For machine builders, product variants and custom configurations can be represented consistently from PLM through ERP to shop-floor systems, minimizing rework and delivery delays.
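Keeping variants consistent across systems implies being able to detect drift. A minimal sketch, assuming each system can export the variant's configuration as option/value pairs: compare every downstream copy with the PLM configuration and report mismatches. The function name, system names, and option values are illustrative only.

```python
from typing import Dict, List, Optional, Tuple


def config_mismatches(
    plm_config: Dict[str, str], downstream: Dict[str, Dict[str, str]]
) -> List[Tuple[str, str, str, Optional[str]]]:
    """List (system, option, expected, actual) wherever a copy differs from PLM."""
    issues = []
    for system, config in sorted(downstream.items()):
        for option, expected in sorted(plm_config.items()):
            actual = config.get(option)  # None if the option is missing entirely
            if actual != expected:
                issues.append((system, option, expected, actual))
    return issues


plm = {"motor": "7.5kW", "voltage": "400V"}
copies = {
    "ERP": {"motor": "7.5kW", "voltage": "400V"},
    "MES": {"motor": "5.5kW", "voltage": "400V"},
    "ShopFloor": {"motor": "7.5kW"},
}
print(config_mismatches(plm, copies))
```

Treating PLM as the reference copy reflects the article's framing of PLM as the source of engineering intent; each reported mismatch could feed the exception workflow described earlier.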

6. Scalable integration without disrupting core systems

Manufacturers can extend ERP, PLM, and MES capabilities step by step, adding new workflows and use cases as business needs evolve, without destabilizing their existing technology landscape.

Build your smart factory with the right strategic implementation partner

Low-code does much more than connect systems. It enables manufacturers to operationalize data across the entire product and production lifecycle, turning insight into structured, measurable action.

From engineering and planning to production and service, low-code strengthens the way information flows through the entire organization. And at CLEVR, we work with manufacturers to turn that potential into tangible business results.

With more than 30 years of experience with the Siemens Xcelerator portfolio and advanced low-code application development, we bridge strategy and execution, connecting proven industrial platforms with the flexibility needed to adapt to changing demands. We start by identifying where value can be unlocked across the operational chain, then design and implement tailored workflows that connect PLM, ERP, MES/MOM, and shop-floor systems. Rather than forcing your organization into rigid templates, we use Mendix, the leading enterprise low-code platform, to build orchestration layers tailored to your specific processes, governance model, and growth ambitions.

This approach enables manufacturers to:

- Align PLM, ERP, MES/MOM, and shop-floor processes around shared outcomes.

- Leverage existing Siemens Xcelerator components and extend them where standard functionality ends.

- Handle exceptions and deviations consistently across teams and systems.

- Evolve workflows as operations, products, and strategies change.

Smart factories are built on tailored workflows

Smart factories are defined not by the technologies they use, but by how well their workflows align people, systems, and decisions. Until that alignment exists, even the most advanced digital initiatives will struggle to achieve lasting impact.

With the right strategic implementation partners, however, manufacturers can overcome these challenges, aligning their systems with business ambitions and their operations with the specific performance goals they set for growth, efficiency, and innovation.

If you are ready to move beyond isolated initiatives and create a truly connected manufacturing environment, contact us for a consultation to explore how your organization can unlock measurable operational value.

Regaining control in the age of generative AI

It is safe to say that these are interesting times to live in.

This blog acknowledges the broader global challenges facing humanity, but zooms in on the potential of GenAI in today's enterprise technology landscape. It offers a grounded perspective on how to look past the hype and use AI to meaningfully support your business objectives.

From ERP to innovation: the journey of a cookie company

Imagine it is 2019, and your cookie company has just "survived" a migration from SAP Business Suite to SAP S/4HANA. Knowing that it sells the best cookies in Europe, the company has grown into a large enterprise with great respect for its own processes and ways of working. A solid ERP implementation has certainly helped you focus on what you do best: producing and selling cookies.

Nevertheless, the regional differences and "delightful customer procedures" that brought you part of your success were tightly coupled to your core SAP implementation. Your team of ABAP and Java developers spent more than a year and a half migrating the custom business logic from the previous SAP version to the new one. New business requests were mostly put on hold because they would have disrupted the timeline.

Or did it go differently?

Saved from slowdown

Fortunately, Gartner is there to help us set the right course. They did so in 2016 as well, advocating concepts such as "keep your core clean." This idea was embraced by enterprise software vendors such as SAP, which began offering extension options outside their core systems and has even partnered with Mendix as its preferred low-code platform since 2017.

Your company did not just follow this trend. It embraced it. A partner helped convert most ERP customizations into separate, non-siloed "systems of differentiation and innovation." Former ABAP developers became Mendix consultants. And thanks to your deep Microsoft ecosystem, citizen developers soon emerged across the organization. Because Power Apps was available to all employees by default, the inevitable rise of modern shadow IT was not far behind (a topic I could write a book about myself).

Despite these growing pains, the benefits became clear. ERP updates were no longer blocked by entangled custom code. Departments began to notice each other's digital initiatives and the speed at which they were delivered.

Your CIO was happy too. A well-defined governance model ensured that the apps being built were not only useful and intuitive, but also secure. You began laying the foundation for a modern data architecture, including a shared catalog of primary data. And as more of your differentiating processes moved to low-code apps, you could gradually shift their data sourcing from the ERP system to a centralized data platform.

The result? Low-code teams now had consistent, simplified access to data from different systems, without needing deep knowledge of those back-end platforms. They could spend more time solving real problems and less time wrestling with technical complexity.

In many ways, this is what a composable enterprise looks like. Thanks again, Gartner!

Wait... Wasn't this supposed to be about AI?

Before we get to that, let's highlight what your cookie company now enjoys: small teams and clarity in a complex world.

As a Mendix consultant, I've had the privilege of working with many different clients of all sizes and ages. One thing that has stood out in my experience is that projects integrating directly with large systems such as ERP or CRM, compared with projects where an integration and/or data platform already existed, often required:

- Larger teams

- Longer delivery cycles

- Broader knowledge of the inner workings of those systems and their procedures

Why? Because responsibilities could not be isolated well enough. That creates a need for more people who understand the broader business and technical context. And in an increasingly complex world, that is your real challenge.

AI is modular by default

I'm very pleased to see that most of the "GenAI magic" we see in production is, or can be, modular by default. Especially with agentic AI, which has proven to be a real breakthrough, you split a solution into:

- A central agent building block that "understands" which tools it can use and which sources it can consult

- An LLM (Large Language Model) that supports decision-making, reasoning, and orchestration

- Memory: a mechanism and a datastore for storing earlier questions and answers

- Well-defined, preconfigured, and parameterized prompts that provide clear instruction paths
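The four building blocks above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a Mendix API or a production agent: `KeywordStubLLM` is a hypothetical stand-in for a real model, the `stock_lookup` tool and the prompt template are invented for the example, and memory is a plain in-memory list rather than a real datastore. The point is that each part can be swapped independently.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Protocol, Tuple


class LLM(Protocol):
    """Anything that turns a prompt into text, so the model stays swappable."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class KeywordStubLLM:
    """Hypothetical stand-in for a real LLM: routes on a keyword in the question."""

    def complete(self, prompt: str) -> str:
        # Only inspect the question line, not the tool list or the history.
        question = prompt.rsplit("Question:", 1)[-1].split("\n", 1)[0]
        return "stock_lookup" if "stock" in question.lower() else "none"


@dataclass
class Memory:
    """Mechanism plus datastore (here just a list) for earlier Q&A pairs."""

    history: List[Tuple[str, str]] = field(default_factory=list)

    def remember(self, question: str, answer: str) -> None:
        self.history.append((question, answer))


# Preconfigured, parameterized prompt that gives the LLM a clear instruction path.
PROMPT_TEMPLATE = (
    "You can use these tools: {tools}.\n"
    "Earlier exchanges: {history}\n"
    "Question: {question}\n"
    "Reply with the name of one tool, or 'none'."
)


@dataclass
class Agent:
    """Central building block: knows its tools and asks the LLM which one to use."""

    llm: LLM
    tools: Dict[str, Callable[[str], str]]
    memory: Memory = field(default_factory=Memory)

    def ask(self, question: str) -> str:
        prompt = PROMPT_TEMPLATE.format(
            tools=", ".join(self.tools),
            history=self.memory.history[-3:],  # only the last three exchanges
            question=question,
        )
        choice = self.llm.complete(prompt).strip()
        tool = self.tools.get(choice)
        answer = tool(question) if tool else "No suitable tool found."
        self.memory.remember(question, answer)
        return answer
```

Replacing `KeywordStubLLM` with a client for an actual model, or the list-based `Memory` with a database-backed one, would not require touching the `Agent` class.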

To clear up a common misunderstanding: tools that claim their AI has been trained specifically for you are overstating things somewhat. The more honest explanation is that these tools are good at tracking historical questions and answers, and at feeding those past interactions into your "new" prompt in a smart way when needed.

The good news is that for most applications it's entirely possible to build this yourself, with the advantage that you keep full control. And with Mendix, you already have the foundation to get started.
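To make the "trained for you" trick concrete, here is a hedged sketch of how past interactions might be selected and fed into a new prompt. It assumes the history is a list of (question, answer) pairs and uses plain word overlap as a crude stand-in for embedding-based similarity search; the function names are hypothetical.

```python
import re
from typing import List, Set, Tuple


def words(text: str) -> Set[str]:
    """Lower-cased word set; a crude stand-in for embedding similarity."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def relevant_history(
    history: List[Tuple[str, str]], question: str, k: int = 2
) -> List[Tuple[str, str]]:
    """Return up to k earlier (question, answer) pairs sharing the most words."""
    asked = words(question)
    scored = []
    for past_q, past_a in history:
        overlap = len(asked & words(past_q))
        if overlap:  # ignore interactions with nothing in common
            scored.append((overlap, past_q, past_a))
    # Stable sort: ties keep their original (chronological) order.
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(q, a) for _, q, a in scored[:k]]


def build_prompt(history: List[Tuple[str, str]], question: str, k: int = 2) -> str:
    """Prepend the most relevant earlier exchanges to the new question."""
    parts = [
        f"Earlier question: {q}\nEarlier answer: {a}"
        for q, a in relevant_history(history, question, k)
    ]
    parts.append(f"New question: {question}")
    return "\n\n".join(parts)
```

Only the selected exchanges travel with the new prompt, which keeps token usage bounded while still giving the model relevant context.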

What "being in control" actually means

Control isn't binary; it's contextual. What is essential for your company can vary from case to case and from one company to another. These are the main areas of control to consider:

1. Choice of LLM

Most off-the-shelf tools don't let you choose your preferred LLM. That isn't necessarily a problem (it keeps things simple), but it becomes one when you want control over which data you share with which party. Moreover, these tools may not pick the very best LLM on the market for your use case, and in the current climate that can change from week to week.

2. Data location

Closely related to the choice of LLM: several LLMs and tools are hosted only in the US or in other parts of the world. Although more and more vendors offer EU-only deployments, you may need full control over where your data is stored, and over whether your LLM is run by a commercial party or by a company with which you can make far-reaching agreements.
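One way to keep LLM choice and data location in your own hands is to treat models as entries in a catalog and filter them against your constraints. The sketch below assumes a hypothetical catalog (`model-a`, `model-b`, `model-c`) recording hosting region, whether you or a contractually bound party manage the deployment, and a quality score from your own evaluation; none of these are real products or benchmarks.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass(frozen=True)
class ModelOption:
    name: str           # hypothetical model identifier
    region: str         # where it is hosted, e.g. "EU" or "US"
    self_managed: bool  # run by you or a party you have binding agreements with
    quality: int        # your own evaluation score for this use case


def pick_model(
    options: Iterable[ModelOption],
    allowed_regions: Set[str],
    require_self_managed: bool = False,
) -> Optional[ModelOption]:
    """Best-scoring model that satisfies the data-location constraints, or None."""
    eligible = [
        o for o in options
        if o.region in allowed_regions and (o.self_managed or not require_self_managed)
    ]
    return max(eligible, key=lambda o: o.quality, default=None)
```

When a better LLM appears next week, you add an entry to the catalog instead of rebuilding the solution.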

3. Token distribution

Most AI tools offer specific functionality for a monthly fee per user. This can add up to a significant monthly investment, especially in large companies, while not every employee may use the tools to their full potential. This is where it helps to allocate LLM tokens company-wide and distribute their usage across departments. That way you can track the financial impact more effectively and evaluate the expected business case in detail.
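A minimal sketch of company-wide token allocation, assuming a monthly token pool split by integer percentages per department and a single hypothetical price per million tokens. Real providers usually price input and output tokens differently, so treat the cost report as an approximation.

```python
from typing import Dict


class TokenBudget:
    """Hypothetical company-wide monthly token pool split across departments."""

    def __init__(self, monthly_tokens: int, percent_shares: Dict[str, int]) -> None:
        if sum(percent_shares.values()) != 100:
            raise ValueError("department shares must add up to 100")
        self.allocation = {
            dept: monthly_tokens * pct // 100 for dept, pct in percent_shares.items()
        }
        self.used: Dict[str, int] = {dept: 0 for dept in percent_shares}

    def consume(self, dept: str, tokens: int) -> bool:
        """Record usage if it fits the department's allocation; refuse otherwise."""
        if dept not in self.allocation:
            return False
        if self.used[dept] + tokens > self.allocation[dept]:
            return False
        self.used[dept] += tokens
        return True

    def cost_report(self, price_per_million_tokens: float) -> Dict[str, float]:
        """Spend per department, to compare against the expected business case."""
        return {
            dept: used / 1_000_000 * price_per_million_tokens
            for dept, used in self.used.items()
        }
```

Unlike per-seat licenses, a department that barely uses AI in a given month also barely costs anything.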

4. LLM monitoring

Anyone who has ever used one of the chat interfaces of the big LLM companies knows that models can hallucinate and drift off course. When you control the individual modules that make up your solution, you can monitor its effectiveness in detail. Datadog, for example, lets you monitor all questions and answers and analyze quality, token usage, and anomalies in an automated way.
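Dedicated observability tools handle this at scale; the sketch below only illustrates the idea with a hypothetical in-house log, not any vendor's API. It assumes every call is recorded with its question, answer, and token count, and flags two example anomaly types: empty answers and calls that use more than three times the median number of tokens. Properly detecting hallucinations or drift requires richer checks, such as evaluating answers against reference data.

```python
import statistics
from dataclasses import dataclass
from typing import List


@dataclass
class Interaction:
    question: str
    answer: str
    tokens: int


class LLMMonitor:
    """Hypothetical in-house log of LLM calls with two simple automated checks."""

    def __init__(self, spike_factor: float = 3.0) -> None:
        self.log: List[Interaction] = []
        self.spike_factor = spike_factor  # flag calls above factor x median tokens

    def record(self, question: str, answer: str, tokens: int) -> None:
        self.log.append(Interaction(question, answer, tokens))

    def total_tokens(self) -> int:
        return sum(i.tokens for i in self.log)

    def anomalies(self) -> List[Interaction]:
        """Empty answers and token-usage spikes, in the order they were logged."""
        if not self.log:
            return []
        median_tokens = statistics.median([i.tokens for i in self.log])
        return [
            i for i in self.log
            if not i.answer.strip() or i.tokens > self.spike_factor * median_tokens
        ]
```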

5. Knowledge

Now and then you hear someone say that "every company is becoming an IT company". While that is certainly up for debate, a similar argument can be made for using AI in your business: any knowledge you build up about its inner workings will help you make informed decisions in the future, with less dependence on a growing number of external parties.

The buy-vs-build dilemma

If you start thinking about building something yourself, let's be realistic: it won't be production-ready tomorrow. But it could be next month. And in today's economy of speed, we have to acknowledge the buy-vs-build dilemma.

Buying AI tools can be tempting. They promise a quick start, vendor support, data privacy (if you're lucky), and sometimes even regional hosting. For around €20 per user you're off to the races, and for an extra "enterprise fee" you also get SSO support. The tool probably uses the most affordable or efficient LLM behind the scenes and assures you that your data won't be used to train their models.

Sounds good, right?

But this is where the fine print begins. These tools often offer limited visibility, little room for customization, and very few levers to control the actual underlying technology. When token prices drop or a cheaper LLM becomes available, the benefit usually goes to the vendor, not to you. And just as you start relying on them, they can raise their prices or quietly discontinue the product altogether.

Unicorns have to start somewhere, but many of these tools have existed for only a few months and are run by very small teams. That is at least worth factoring into the comparison.

So, what's the alternative?

The pragmatic roadmap

To wrap up, let's translate all of this into a practical, realistic roadmap that suits companies of any size. The core message is simple: start small, learn fast, and continuously evaluate the best course of action, always with a long-term strategy in mind. Just as we do for our clients who use Mendix.

Step 1: Experiment and stay informed

Let your employees experiment with the tools they come across. With new tools appearing every day, a centralized department can't possibly keep up. Besides, your employees will probably use them anyway. Educate people on safe use (experimenting without sharing sensitive data) and empower enthusiasts to promote their tools within the company. This is also an excellent opportunity to sharpen your security policy and training in general.

Step 2: Set up a buy-vs-build decision process

Put a sound internal process in place so employees can submit use cases or tools and other employees can vote on them (if the size of your company calls for it). Try to integrate this into your general software procurement process, while avoiding bottlenecks that could be automated or resolved up front. Use a decision tree tailored to your company to choose between buying and building each AI solution.
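What such a decision tree looks like depends entirely on your company. The sketch below is purely illustrative: its criteria are loosely taken from the control areas discussed earlier (data sensitivity, integration with your own systems, per-seat cost versus running cost, vendor maturity), while the order of the branches and the cost comparison are assumptions you would replace with your own.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    handles_sensitive_data: bool  # e.g. customer or recipe data
    needs_own_integrations: bool  # must call your own systems or data platform
    users: int
    seat_price_eur: float         # monthly per-user price of the candidate tool
    build_run_cost_eur: float     # your estimate: monthly tokens, hosting, upkeep
    vendor_is_established: bool


def buy_or_build(uc: UseCase) -> str:
    """Illustrative decision tree; branches and thresholds are assumptions."""
    if uc.handles_sensitive_data or uc.needs_own_integrations:
        return "build"  # control over LLM choice and data location matters most
    if not uc.vendor_is_established:
        return "pilot"  # try it, but keep the build option open
    if uc.users * uc.seat_price_eur > uc.build_run_cost_eur:
        return "build"  # per-seat fees exceed running your own solution
    return "buy"
```

Encoding the tree this way makes it easy to automate the first triage of submitted use cases, which helps avoid the procurement bottlenecks mentioned above.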

Step 3: Embrace platform thinking

As described in this article, the modular approach to (agentic) AI is available and actionable. It's even likely that most of the components are already in place. Use the very first use case (okay, maybe the second) to start iterating on your approach to "embedded AI" in your company's software landscape, with clear ownership, separation of concerns, and solid governance, just as with the other technologies that support the business today. You can do it, and if you need help, a partner will gladly assist.

Agentic AI is here. Go build it.

The potential of agentic AI is undeniable, and what fascinates me most is how much control organizations can exercise without adding unnecessary complexity. We aren't starting from scratch; we're building on decades of technological progress, including the rise of composable enterprise architectures and the essential distinction between systems of record and systems of differentiation and innovation.

Seen through this broader lens, AI doesn't have to become a fragile dependency. Instead, it can be a powerful enabler, provided you choose the right tools and the right partners to guide you.

Agentic AI is already here, and asking meaningful questions about your own data is no longer rocket science.

With Mendix, you can harness this potential in a sustainable, controlled, and fast way, while keeping the freedom to adapt and choose what works best for your business.

Not just for now, but for the next decade as well.

Originally published here.
